Theory

As the name findiff suggests, the package uses finite difference schemes to approximate differential operators numerically. In this section, we describe the method in some detail.

Notation

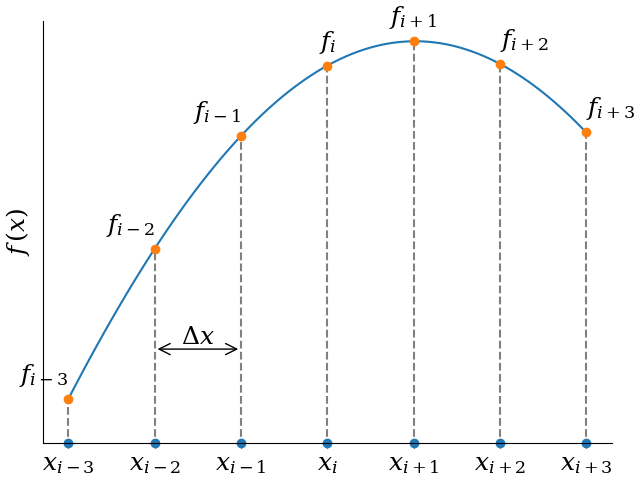

In this section, we are talking about functions on equidistant grids. Consider the following figure.

In 1D, instead of a continuous variable \(x\), we have a set of grid points

for some real number \(a\) and grid spacing \(\Delta x\). In many dimensions, say 3, we have

For a function f given on a grid, we write

The generalization to N dimensions is straight forward.

The 1D Case

Say we want to calculate the n-th derivative \(\frac{d^n f}{dx^n}\) of a function of a single variable and let the function be given on an equidistant grid. The basic idea behind finite difference is to approximate the true derivative at some point \(x_k\) by a linear combination of the function values around \(x_k\).

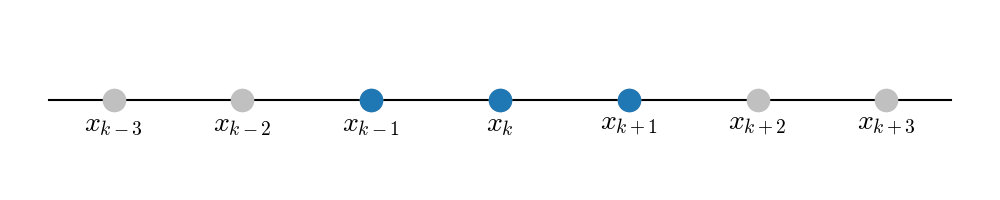

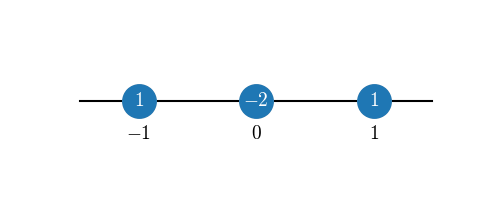

where A is a set of offsets, such that \(k+i\) are indices of grid points neighboring \(x_k\). Specifically, let \(A=\{-p, -p+1, \ldots, q-1, q\}\) for positive integers \(p, q \ge 0\). For instance, for \(p=q=1\), we would use the following (blue) grid points when evaluating a derivative at \(x_k\):

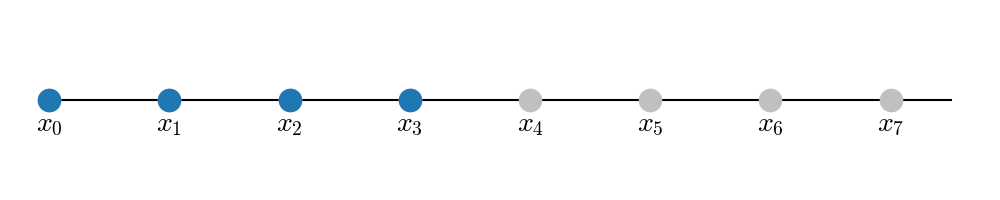

This is a symmetric stencil. Obviously, this does not work if \(x_k\) is at the boundary of the grid, because there would be no other points either to the left or to the right. In that case, we can use one-sided stencil, like the following forward-stencil (here, \(p=0, q=3\)), where we evaluate the derivative at \(x_0\) with four points in total.

For \(f_{k+i}\) we can insert the Taylor expansion around \(f_k\):

So we have

Now let us demand that \(M_\alpha = \delta_{k\alpha}\), where \(\delta_{k\alpha}\) is the Kronecker symbol. In other words, we have the equations (one for each \(\alpha \ne k\)):

or

and one equation for \(\alpha = k\)

or

If we take enough terms (\(p, q\) high enough), we can make more and more terms in the Taylor expansion vanish, thereby increasing the accuracy or our approximation.

For example, choosing a symmetric scheme with \(p=q=1\) for the second derivative, we get the equation system (with \(\Delta x = 1\))

which has the solution

so that

This expression has second order accuracy, i.e. the error is getting smaller as \({\cal O}(\Delta x^2)\).

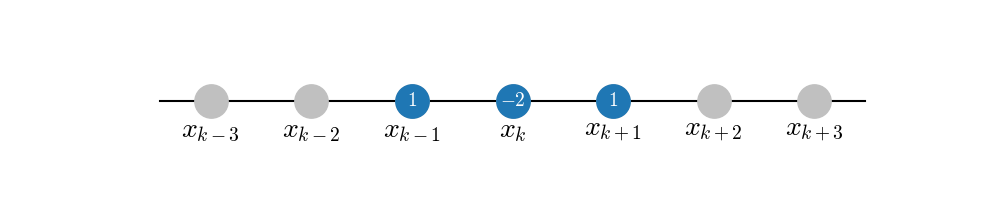

We can visualize this approximation compactly by inserting the coefficients in the stencil plot:

Or, even more compactly, dropping the unused grid points and writing only the offsets from \(x_k\):

Multiple Dimensions

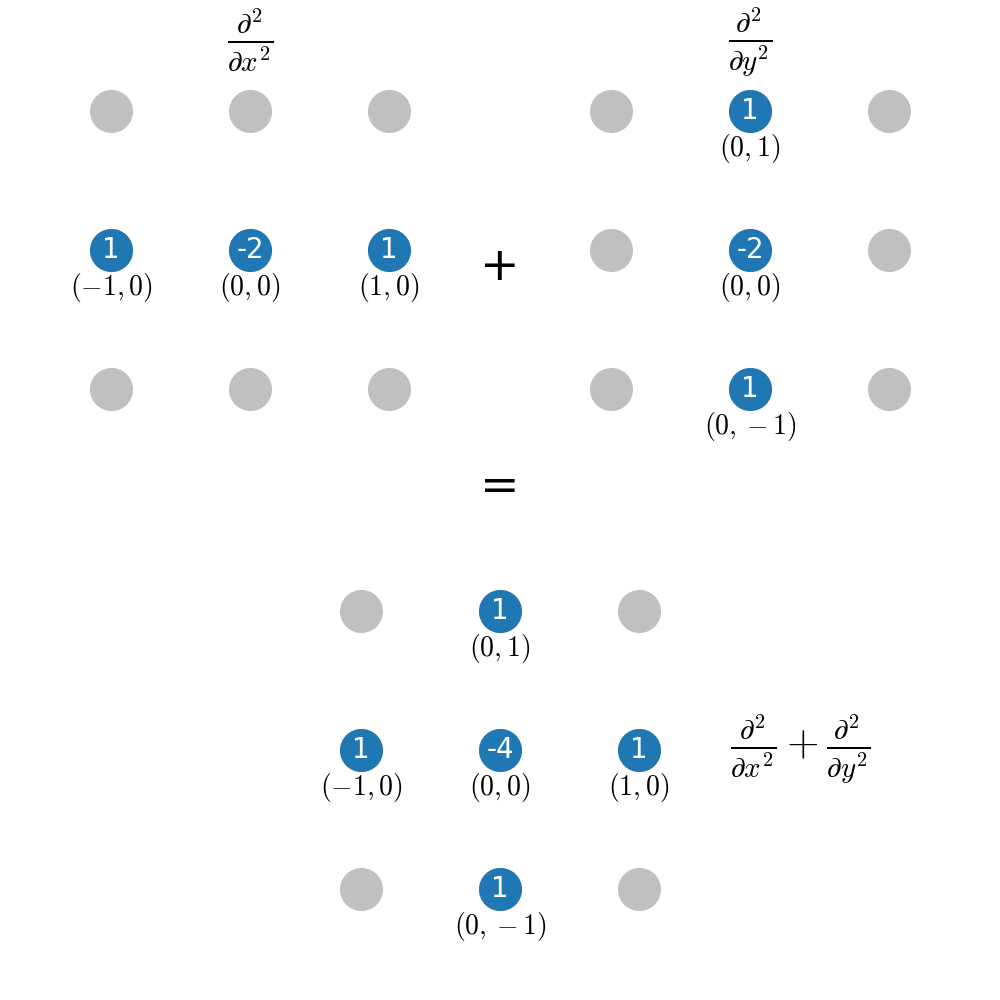

For functions of several variables, the same idea of approximating (now partial) derivatives as linear combination of neighboring grid points can be applied. It is just getting more cumbersome to write it all down, because a priori, in multiple dimensions, there is much more degree of freedom for choosing the shape of the stencil. However, it turns out that in most cases the “ideal” stencil is just the superposition of stencils in 1D. As an example, consider the 2D Laplacian

Our grid is now two-dimensional and we can reuse the stencil for the second derivative in 1D from the previous section:

It is not obvious that a superposition like this gives the “best” stencil in 2D with nearest neighbors only. However, it can be shown that this is indeed the case.